Fighting bias in security analysis

I am a huge fan of automation; I strongly believe that automation, machine learning and/or artificial intelligence (whatever these terms mean to different people) are our best chance to tackle one of the biggest problems we have in the cyber security industry: human limitations.

As the amount of transactional and log data that needs analysis keeps growing, throwing traditional resources (in this case: human minds) at the problem stopped being an option more than a decade ago. I know that many companies still use no analysis automation tools, but that is a different situation. These companies are not going anywhere; they are simply set to find out the details of their breach some years later. If they're lucky, before they hit the headlines.

Most companies, though, use machine analysis and correlation to cut the noise. That is the right decision, but one needs to be aware of some potential issues. These issues are amplified when machine learning is used, because fewer human minds are involved in the process.

Human-performed analysis

No matter what technology one uses today, there is always a significant degree of human involvement. Humans define the rules for what is important and what is not, which is exactly where human bias comes into play. Any triage happens after an alert is generated, but what happens if an alert is never generated because the analysis system is biased?

I recently came across Buster Benson's cognitive bias cheat sheet. Besides being an interesting and refreshing read, it maps so closely onto bias in cyber security log analysis that it's scary.

Machine learning / machine-performed analysis

There are two major models of machine learning: supervised and unsupervised. You can learn more about these models from several articles; I suggest this one, which is appropriate for all levels of understanding and prior knowledge. The caveats presented here apply to different degrees to different models. But the bottom line is: they apply, and the more we keep that in mind, the better prepared we are.

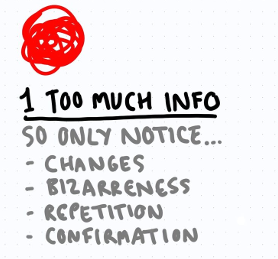

Too much information

As there is too much information available, we humans tend to notice only outliers and changes in patterns. We only notice when something changes significantly. In addition, we tend to ignore information that contradicts our existing beliefs.

Apply that to log analysis. Any rules one designs will have the same disadvantages:

- Brute force attacks and spraying attacks may be identified through thresholds - the equivalent of changes in patterns and outliers

- Repeated alerts that are considered to be false positives will be snoozed and ignored instead of analyzed every time - the equivalent of confirmation bias. I would argue that spraying attacks used to be ignored because the events were spread out over time and so never met the thresholds.
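To make the threshold point concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the event format, the function names `per_account_alerts` and `per_source_spread`, the threshold values and the sample data are hypothetical and not taken from any particular SIEM. It shows a classic per-account threshold catching a brute force attempt while a slow spray stays under it, and a complementary rule that looks at how many distinct accounts a source touches.

```python
from collections import defaultdict

# Hypothetical failed-login events: (timestamp_seconds, source_ip, account)

def per_account_alerts(events, threshold=5, window=300):
    """Alert when one account sees >= threshold failures within `window` seconds."""
    by_account = defaultdict(list)
    for ts, _src, account in events:
        by_account[account].append(ts)
    alerts = set()
    for account, stamps in by_account.items():
        stamps.sort()
        start = 0
        for end in range(len(stamps)):
            # Shrink the window from the left until it spans at most `window` seconds
            while stamps[end] - stamps[start] > window:
                start += 1
            if end - start + 1 >= threshold:
                alerts.add(account)
                break
    return alerts

def per_source_spread(events, min_accounts=20):
    """Flag sources that fail against many distinct accounts, regardless of timing."""
    accounts_by_source = defaultdict(set)
    for _ts, src, account in events:
        accounts_by_source[src].add(account)
    return {src for src, accts in accounts_by_source.items() if len(accts) >= min_accounts}

# Brute force: 10 failures against one account within 10 seconds
brute = [(t, "203.0.113.5", "alice") for t in range(10)]
# Spray: one failure per account, one attempt every 10 minutes, 50 accounts
spray = [(i * 600, "198.51.100.7", f"user{i}") for i in range(50)]
events = brute + spray

print(per_account_alerts(events))  # only the brute-forced account
print(per_source_spread(events))   # only the spraying source
```

The per-account rule is not wrong; it simply encodes the "notice outliers" bias. The second rule is one possible counterweight, and it carries its own assumptions (for example, that shared gateways do not legitimately fail against many accounts).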

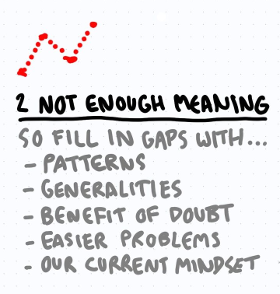

Not enough meaning

We need context to make sense of what we experience and see. Humans tend to assume behaviors based on stereotypes and generalizations. We tend to overvalue historical information, and we round / simplify numbers and percentages.

Of course, we think we know what the general context is and what others are thinking.

Apply that to rule analysis and you will see the similarities:

- Contextualizing the information means we enrich and correlate data based on assumptions about how systems interact, how they communicate and what we know

- Historical information is used as a basis to highlight patterns, ignoring the fact that the historical information may be giving us a wrong pattern altogether

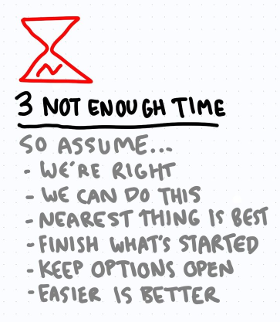

Not enough time

Overconfidence, triggered by our need to act fast, may impair our judgement. Humans tend to focus on events happening in a short time span, either in front of us or in the recent past. We tend to choose the safest option, the solution least likely to cause problems, so simple solutions are often preferred.

When we implement rules to analyze security logs, we act in a similar way:

- We may base historical trend analysis on the not very distant past

- Often, we do not create models that are enriched over a long time span, because we need an immediate response

- Most companies tend to fine-tune to minimize false positives in order to lower the probability of business disruption

- As complexity is the enemy of security, we opt for simple solutions as often as possible
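The first bullet above can be illustrated with a small Python sketch. The function `is_anomalous`, the z-score rule, the floor of 1.0 on the standard deviation and the daily counts are all hypothetical choices made for this example. It compares a 7-day baseline with a 74-day baseline on a metric that ramps up slowly over two weeks.

```python
from statistics import mean, pstdev

def is_anomalous(history, today, lookback, z=3.0):
    """Flag today's count if it exceeds mean + z * stddev of the last `lookback` days."""
    baseline = history[-lookback:]
    mu = mean(baseline)
    # Floor the deviation so a perfectly flat baseline does not alert on tiny changes
    sigma = max(pstdev(baseline), 1.0)
    return today > mu + z * sigma

# Hypothetical daily counts of some event: 60 quiet days, then a slow 14-day ramp
quiet = [100 + (d % 5) for d in range(60)]  # values between 100 and 104
ramp = [110 + 10 * d for d in range(14)]    # 110, 120, ..., 240
history = quiet + ramp
today = 250

short_window = is_anomalous(history, today, lookback=7)
long_window = is_anomalous(history, today, lookback=74)

print(short_window)  # False: the ramp itself has become the baseline
print(long_window)   # True: the quiet period is still part of the baseline
```

With the short window, the slow ramp has already become "normal" by the time it matters. A longer window is not free either: it costs storage and reacts more slowly to legitimate change, which is exactly the trade-off the need for speed pushes us to skip.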

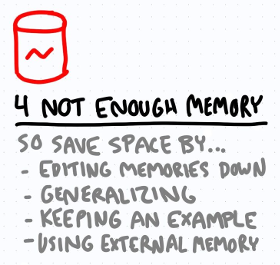

Not enough memory

Since we humans cannot remember everything, we tend to keep in our memory what is repeated. In that way we reinforce our memories. At the same time we opt to ignore specificities in favor of generalizations, and we tend to summarize the information, creating a more abstract model.

Does that sound similar to what we do when managing logs?

- Usually systems provide two storage pools: the log storage and the event storage. Event storage - and specifically storage of positive events - tends to be retained longer than log storage, and any subsequent events will be checked against event storage for correlation and enrichment.

- Connectors, descriptors and generalizations are used by some systems to ingest logs from different sources through a (usually least) common denominator.
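As a rough illustration of the common denominator point, the Python sketch below maps a source-specific record onto a small shared schema. The schema, the `normalize` function, the field names and the sample record are all hypothetical; real connectors differ from product to product. The part worth noticing is the list of fields that never make it into the normalized event.

```python
def normalize(raw, field_map):
    """Map a source-specific record to the common schema.

    `field_map` maps common field names to the source's native field names.
    Returns the normalized event and the sorted list of native fields that were dropped.
    """
    event = {common: raw.get(native) for common, native in field_map.items()}
    dropped = sorted(set(raw) - set(field_map.values()))
    return event, dropped

# Hypothetical VPN log record with two fields the common schema has no place for
vpn_record = {
    "time": "03:12:00",
    "client_ip": "198.51.100.7",
    "login": "alice",
    "event": "login_success",
    "device_posture": "unmanaged",
    "mfa_method": "none",
}

# Hypothetical mapping: common schema field -> VPN-specific field
vpn_field_map = {
    "timestamp": "time",
    "source_ip": "client_ip",
    "user": "login",
    "action": "event",
}

event, dropped = normalize(vpn_record, vpn_field_map)
print(event)
print(dropped)  # ['device_posture', 'mfa_method']
```

Any rule written against the normalized events can no longer see the dropped fields, so the generalization made at ingestion time quietly limits what every later correlation is able to notice. Logging what gets dropped, as this sketch does, is one way to keep that choice visible.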

The problem with biases

The biases of the human mind are still a subject of discussion, study, analysis and argument. The truth is that people who have fewer biases usually lose in debates against people who have more biases, simply because the more biases one has, the less likely one is to change one's mind. There is no simple solution to that, except of course education, education and education.

In security log analysis some biases are even positive, wanted and intentional. One of these is the effort to suppress false positives. In the big picture, a security analyst or a team developing rules for event analysis needs to come up with log correlation, thresholds, patterns and alerts. These people should be well aware of these potential biases and try their best to avoid falling for them during the design phase. The reason is that whatever gets into the design reinforces the biases, making escape almost impossible. That would lead to security events going unnoticed.

It is also very important to have these rules independently reviewed on a regular basis. A third party, a new employee, or someone who was not part of the previous rule definition has the potential to offer a fresh view, hopefully eliminating some of these biases.

Finally, when you look for your next SIEM solution, you might want to ask the vendor for a new implementation team, consisting of people who have never worked together before. This would be one way to avoid human biases such as anchoring, choice-supportive bias and the continued influence effect, among others.

Other ideas to overcome bias in security event analysis?

Illustrations are either originals by @buster (https://medium.com/thinking-is-hard) or slightly adapted.